Why AI agents fail in production—and how to fix it

AI

LLMs

Agents

Newsletter

Is Data Science Dead in the Age of AI?

AI

LLMs

Agents

Newsletter

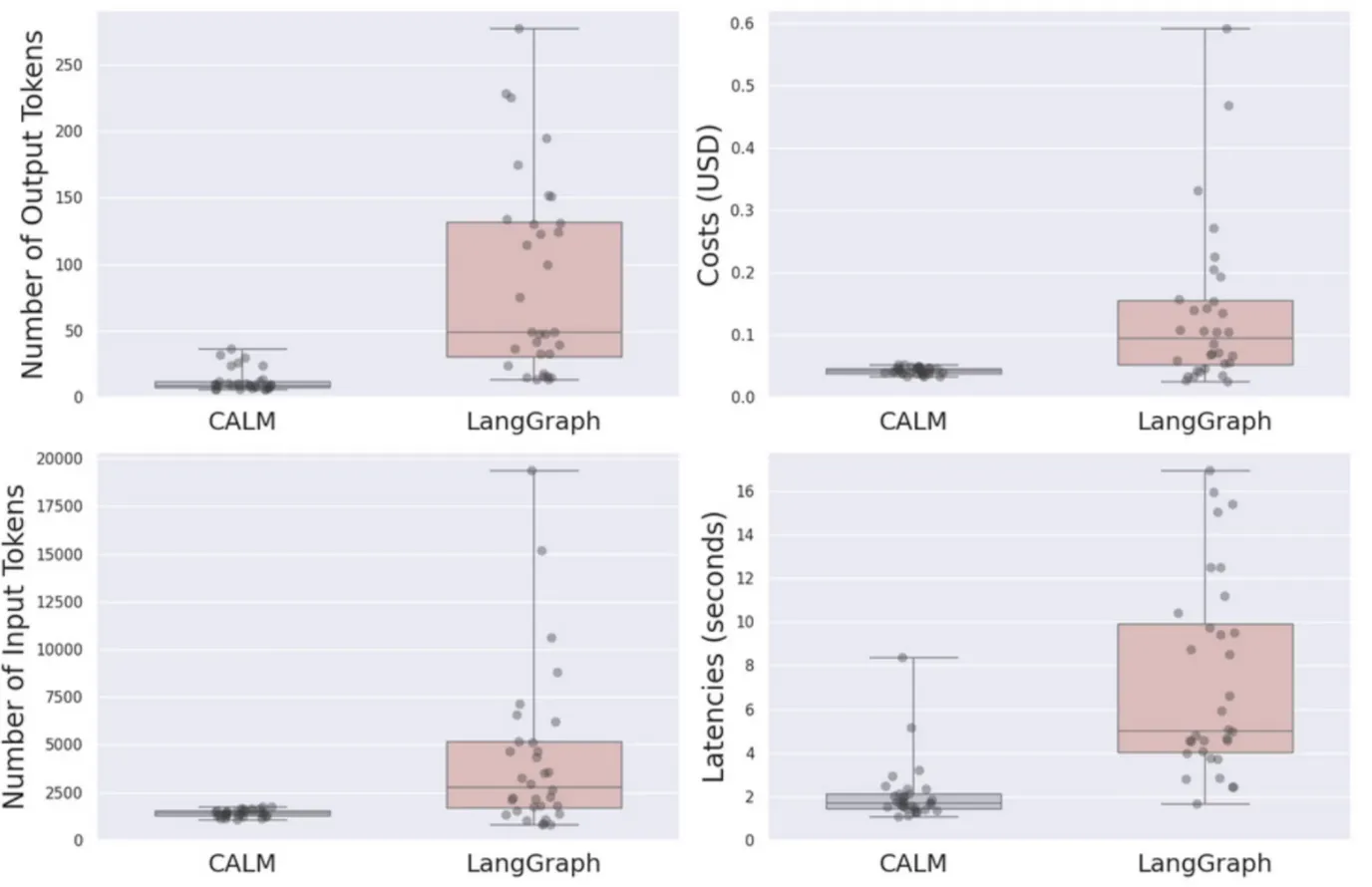

Escaping AI Proof-of-Concept Purgatory

AI

LLMs

Agents

Newsletter

How To Build A Travel AI Assistant That Doesn’t Hallucinate 🤖

AI

LLMs

Agents

Newsletter

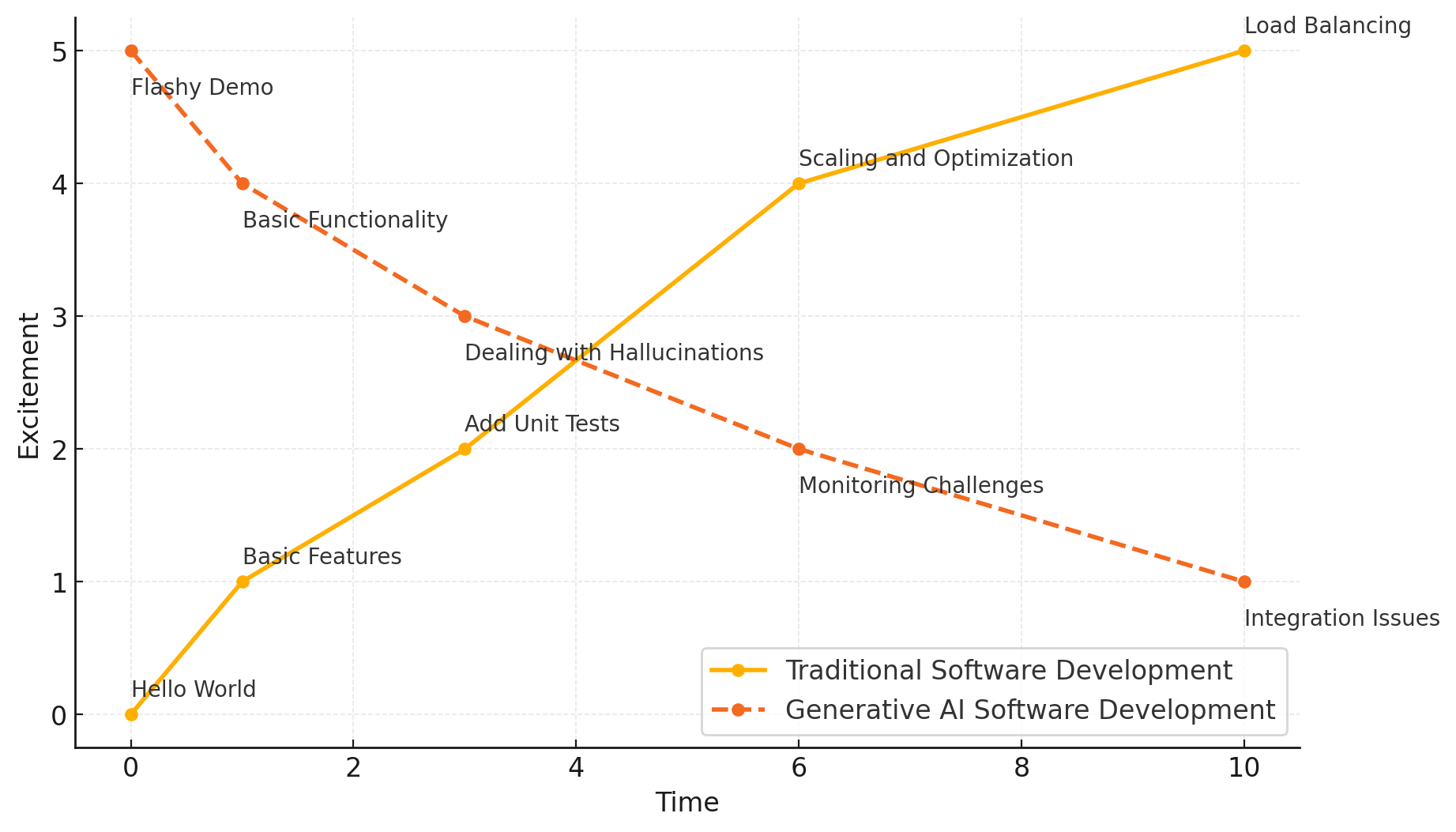

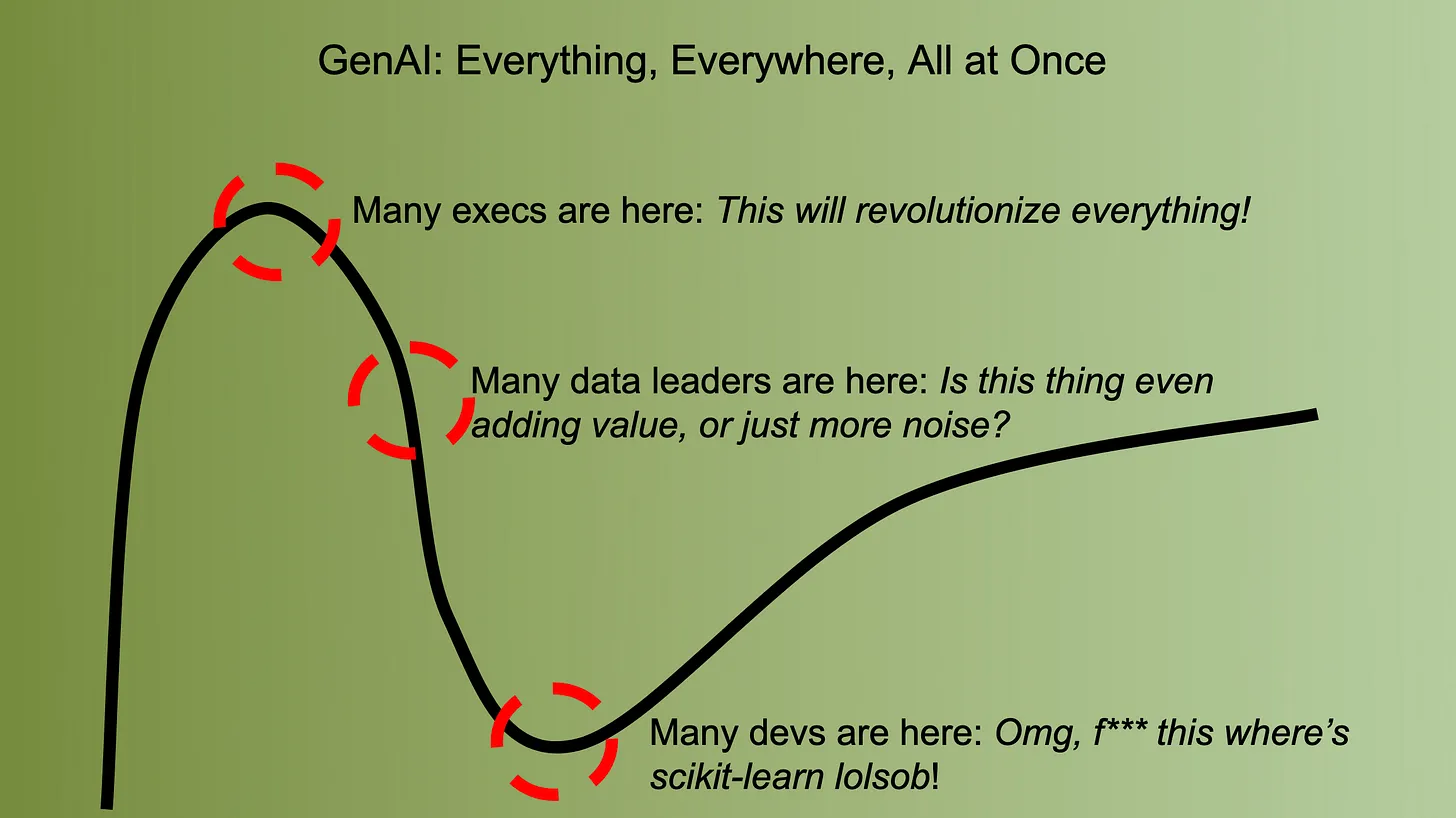

Where are you in the GenAI Hype Cycle?

AI

LLMs

Agents

Newsletter

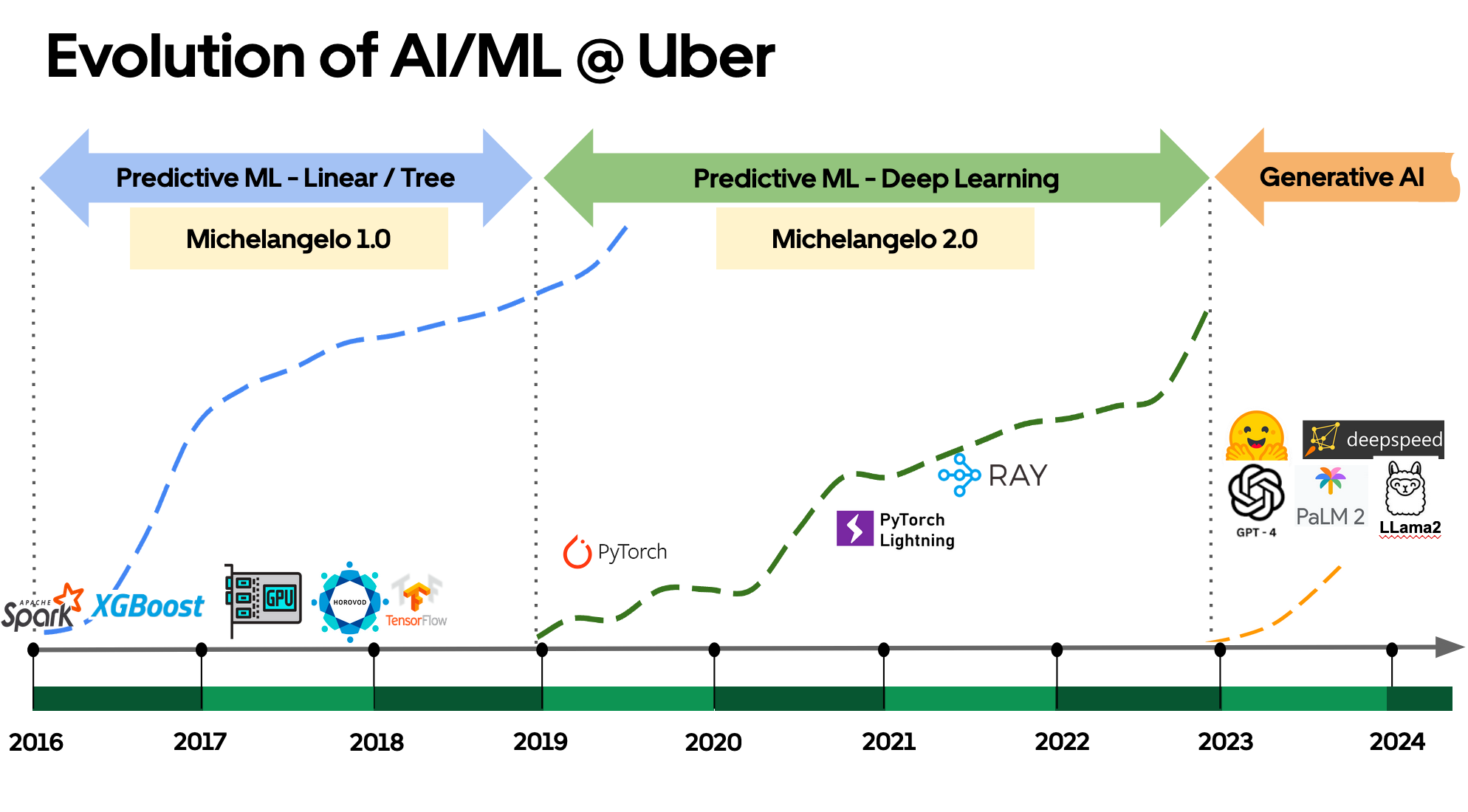

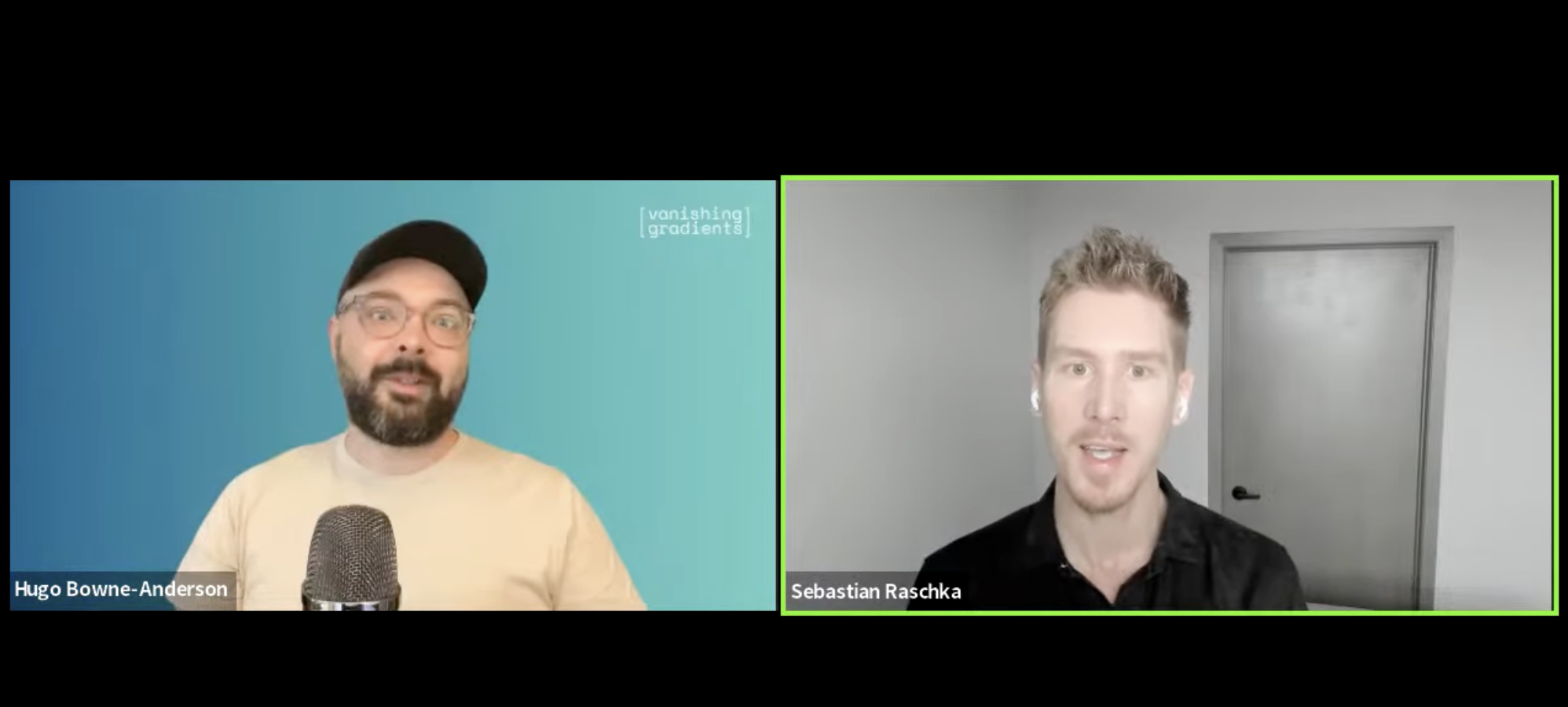

Building Reliable and Robust ML/AI Pipelines

AI

LLMs

Agents

Newsletter

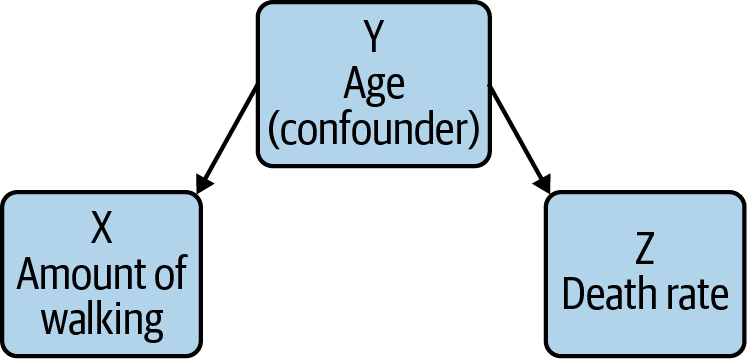

Rethinking Data Science, ML, and AI

AI

LLMs

Agents

Newsletter

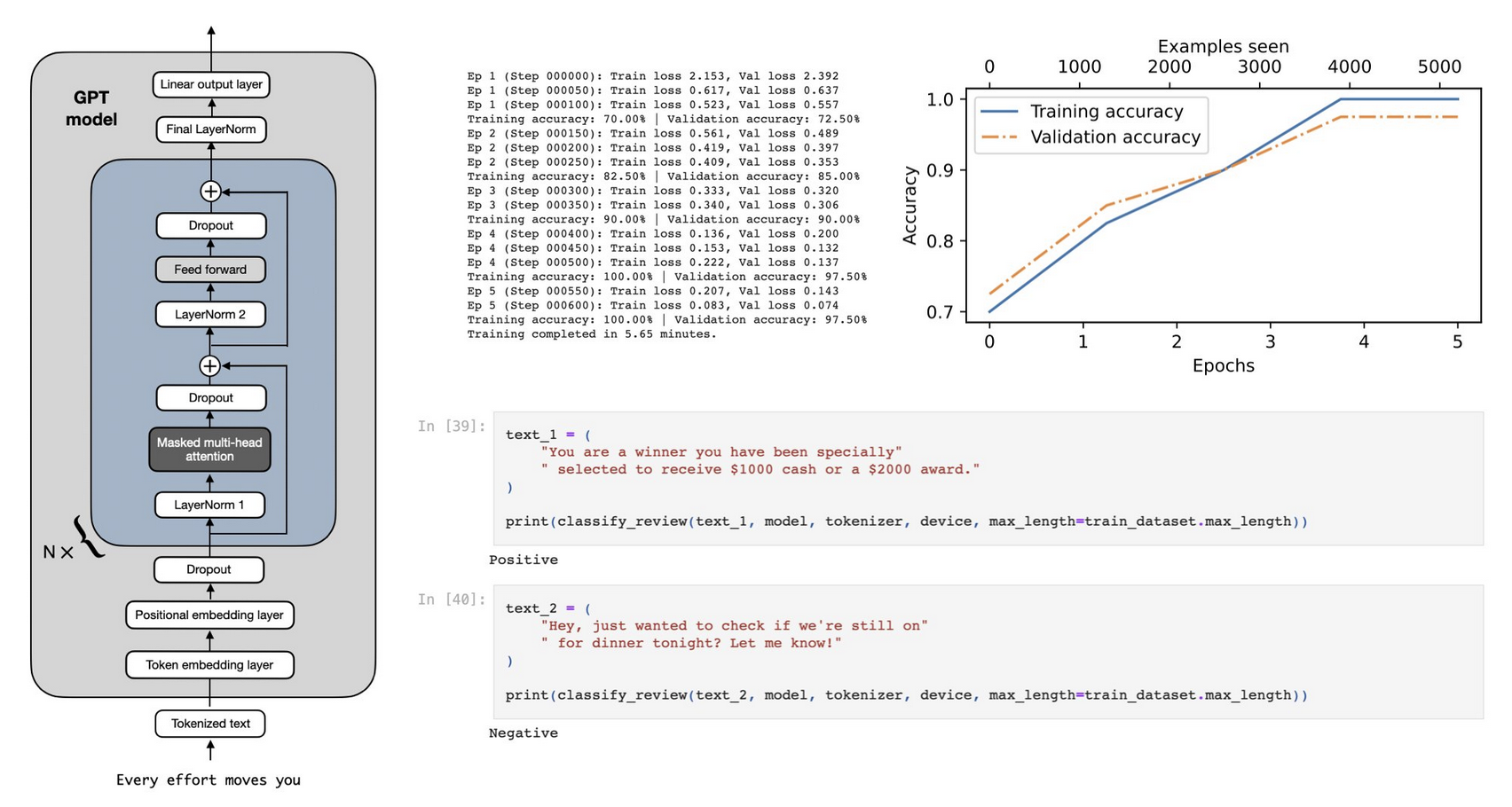

Developing and Training LLMs From Scratch

GenAI

LLMs

10 Brief Arguments for Local LLMs and AI

GenAI

LLMs
